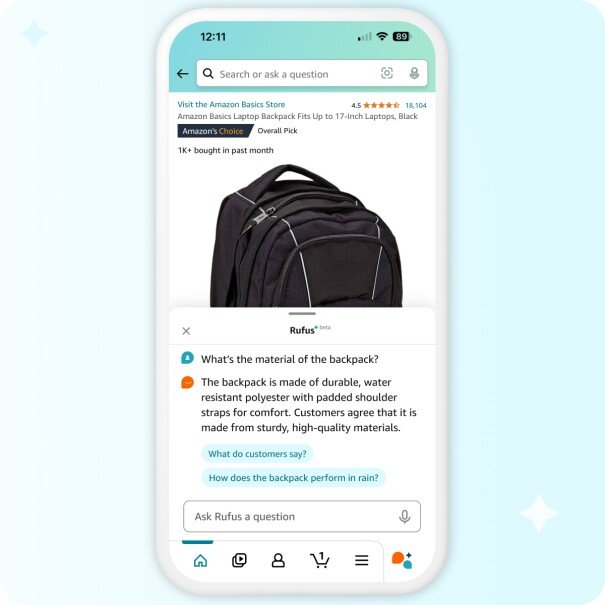

Rufus is the conversational AI shopping assistant embedded in Amazon’s retail app. Launched in July 2024 to millions of customers, Rufus helps people shop more intuitively by answering questions through natural conversation—everything from product details and recommendations, to order history and tracking.

Given the confidentiality of this work, the following provides a high-level summary. Please reach out directly to discuss specifics about my design approach and process.

THE CHALLENGE

Amazon’s catalog spans millions of items across countless categories, brands, and price points. While this is convenient when customers know exactly what they want, it can be overwhelming when they don’t.

Our data science team had developed a large language model (LLM), and one potential application was to help customers explore Amazon’s vast selection through natural-language conversation. For shopping, our overall design challenge was to define a new mental model for how an interface should surface dynamically generated, AI-driven content—and to make that experience feel trustworthy, intuitive, and consistent, even when the model’s non-deterministic output varied from one query to the next.

This was a global challenge for the entire Rufus shopping program. Among a number of scope areas, my most important focus was to define the core AI-powered product-finding experience: the shopping flow that helps customers move from broad, open-ended questions to specific product recommendations. In other words, how might we help customers find a needle in Amazon’s haystack?

Adding to the complexity, this new conversational feature needed to integrate into Amazon’s production-scale mobile app, serving hundreds of millions of customers daily. Even small design missteps could disrupt customer trust or conversion, and cause major financial impact. It required tight coordination between science, product, business intelligence, and engineering teams.

How might we help customers find a needle in Amazon’s haystack when they’re not sure where to start?

MY ROLE

Design Leader

Responsible for defining the product-finding and recommendation experience for Rufus, while managing a team of six UX designers across five major scope areas that together supported the full shopping journey:

Customer onboarding

Broad-to-narrow search experiences

Product recommendations

Deals discovery and pricing

Gifting & holiday

Customer service

This case study focuses on one merged scope area—the Broad-to-Narrow Search and Product Recommendation experience—a two-year ongoing effort that became the foundation for Rufus’s core product-finding capability.

KEY PARTNERS

2 Junior UX Designers

1 UX Researcher

3 Principal Product Managers

2 Data Science Leads

2 Engineering Teams

PROJECT CONTEXT

WE SET OUT TO TRANSFORM A RAW PROTOTYPE INTO A COHESIVE, TRUSTWORTHY EXPERIENCE THAT SET OUR FOUNDATION FOR AI-POWERED SHOPPING.

When my team joined the Rufus effort, our teams had already built a technical proof of concept: a conversational bottom sheet displaying a linear chat interface layered over the shopping app. The model could generate responses, but their quality and relevance were low, and the experience hadn’t yet been shaped around customer needs.

I started with a potential three-month runway towards a limited invite-only release. This expanded to a larger soft launch in February 2024, and later accelerated toward full launch by summer 2024. Our goal was to design, test, and ship quickly—balancing invention with the realities of a live e-commerce platform serving hundreds of millions of customers.

AS OUR MODEL CAPABILITIES AND UNDERSTANDING OF HOW TO WORK WITH IT EVOLVED, OUR SCOPE, TIMELINE, AND DEFINITION OF LAUNCH READINESS WERE FLUID. CONSTANT PIVOTS IN DIRECTION, PROCESS, AND TIMING BECAME OUR BASELINE.

A NEW FRONTIER

Among the many use cases Rufus needed to support, product finding was the most critical for a shopping experience. Yet the product and technical teams were divided into separate scope areas—one focused on broad search queries, another on product recommendations—making it difficult to define a cohesive end-to-end journey. Because all features lived within the same conversational surface, there were no distinct pages or touchpoints to anchor design ownership. What felt like isolated workstreams internally appeared as one continuous experience to customers.

In conversational interfaces, the boundaries between scope areas are fluid. As a conversation unfolds, the features we built separately flow into each other or combine.

With every team building features that rendered on the same conversational surface, and no distinct pages or touchpoints to anchor ownership, many of our established frameworks for developing products and features at scale did not support this new type of experience.

CUSTOMER INSIGHTS

Because we began with a three-month timeframe to release and we didn’t yet have UX research resourcing in place, we did not have time for initial generative research. We had a very tight window to deliver designs for engineering scoping and kickoff. We began with pre-existing relevant data points from work with conversational AI experiences during the past year:

Customer interest in and receptiveness to AI assistance ran the gamut. Some were turned off by the idea, though most were open to it as long as it enhanced their shopping experience and made it easier.

Customers expected AI shopping assistants to customize the experience by asking questions and giving context before making specific recommendations.

Though some customers were wary because of privacy concerns, most expected a shopping assistant to remember their long-term preferences so they wouldn’t need to explain them repeatedly.

Customers expected a shopping AI to carry context over from their previous shopping sessions.

Customers expected a shopping AI to curate a set of recommendations rather than show them an endless range. The preferred breadth varied by customer, but about five recommendations was a common expectation.

Customers didn’t have a solid expectation for how a shopping AI interface should look or work.

Customers expressed a desire for choice and control over whether to get shopping guidance or to browse and discover on their own.

EARLY APPROACH

We had two main design problems to solve out of the gate.

1) We first needed to establish clear behavioral cues and visual signposts to help customers understand what Rufus could do, and how to use it. The challenge was to design an interface that could flex with unpredictable model behavior while remaining consistent, intuitive, and trustworthy.

2) Once customers engaged, we needed to determine what content Rufus would show them and in what form. This work was split across two workstreams, broad queries and product recommendations, which moved forward in parallel.

INTERACTION MODEL

Our first designs placed the entry point to a Rufus conversation in the Amazon search field, where most customers began their shopping journey on our app. To make this work, we would need to detect conversational intent from natural-language queries typed into the search bar and route those queries to the conversational layer.

[insert flow: Search —>Rufus layer pops proactively]

PRODUCT FINDING CONTENT

Although we knew our long-term aim was a dynamic UI system, we began with a templated approach to bias towards speed to market. For broad queries, we created a template that focused on recommending product categories. Drawing from a framework we created for shopping intents, we identified three major types of queries: information-seeking, product-seeking, and customer-service. We focused on the information-seeking and product-seeking queries, while another team took on the customer service scope.

We used a text-based template for information-seeking queries and a card-based layout for product-seeking queries. We heavily debated whether these cards should show categories, products, or both. Because of technical constraints, we aligned on starting with categories alone, at varying levels of breadth.

INTERNAL BETA TESTING

Our ambitious timelines mobilized our teams quickly. However, after an assessment of the overall Rufus program, beyond just this scope area, our leaders decided to delay the public release and move forward with a controlled internal beta for Amazon employees outside of our org. This gave us the chance to run qualitative testing.

Our first prototype assumed that customers would naturally type questions into the search bar, prompting Rufus to appear automatically.

Testing quickly revealed otherwise:

Most customers didn’t use natural language in the search bar.

When Rufus appeared automatically, it felt intrusive and confusing.

The assistant seemed overactive, not intelligent.

We realized that determining “conversational intent” algorithmically was the wrong approach. Instead of predicting when to appear, Rufus needed clear, intentional entry points where customers could choose to engage.

But as we began testing, it became clear that the assistant’s behavior felt unpredictable. Customers didn’t always understand why Rufus appeared or what it was capable of. What we thought would feel “smart” often felt pushy or inconsistent.

That insight led us to reframe the problem—moving from system-driven behavior to customer-invited interaction—the foundation for the next phase of design.

Rufus answers questions about detailed product information, and summarizes customer reviews into an easily glanceable format.

Rufus helps customers understand their shopping needs, and compare options.

It assists customers with upcoming deliveries and past orders.

We’re continuing to experiment, enhance, and develop new ideas, monitoring both qualitative and quantitative data as we collect feedback and insight from customers. We’ve just gotten started with harnessing the power of generative AI to take Amazon shopping to a new level of convenience, saving customers time and helping them make more informed purchases.
