Rufus is the conversational AI shopping assistant embedded in Amazon’s retail app. Rufus helps millions of customers shop more intuitively by answering questions through natural conversation—from product recommendations and comparisons to order history and tracking.

Due to confidentiality, this summary provides a high-level overview of the behavioral, interface, and relevance frameworks behind Rufus’s core product-finding experience.

THE CHALLENGE

Amazon’s catalog spans millions of products across countless categories, brands, and price points. While this breadth offers great choice, it also means that finding the right product requires effort—filtering, comparing, and sifting through reviews. Our data-science team developed a large language model (LLM) to help customers explore this vast selection through natural-language conversation.

Our design challenge was to define a new interaction model for surfacing dynamically generated, AI-driven content—and make the experience feel trustworthy, intuitive, and consistent despite variable model output.

My focus was the core product-finding experience: guiding customers from broad, open-ended questions to specific, relevant recommendations. The solution had to balance invention with production-scale reliability across a massive e-commerce site serving hundreds of millions of users.

MY ROLE

As design lead, I oversaw UX design from early ideation through prototyping and launch. Partnering with cross-functional teams to translate emerging model behaviors into human-centered experiences, I defined frameworks for interaction, trust, and adaptivity. I was responsible for delivering design assets, specs, and documentation, and contributed hands-on as needed.

KEY PARTNERS

2 UX Designers

1 UX Researcher

3 Principal Product Managers

2 Data Science Leads

2 Engineering Teams

PROJECT CONTEXT

When I joined, the program had a three-month target for internal release. We reached soft launch three months later, in February 2024, and then accelerated toward public launch in July 2024.

Working with shifting scope and high ambiguity, two UX designers and I partnered with product, data-science, and engineering leads to prototype model behaviors, test conversational flows, and shape uncertain model outputs into reliable experiences. Everyone on the team was balancing this work alongside other workstreams.

When we kicked off, Rufus existed as a technical prototype—a conversational bottom-sheet overlay that could generate responses but lacked behavioral logic or coherence specific to customer use cases and experiential flows.

WITH LITTLE PRECEDENT TO DRAW FROM, OUR GOAL WAS TO TRANSFORM A RAW PROTOTYPE INTO A COHESIVE, TRUSTWORTHY EXPERIENCE THAT COULD SCALE WITH EVOLVING MODEL CAPABILITIES AND CUSTOMER EXPECTATIONS.

REFLECTIVE FRAMEWORK

Since launch, I’ve distilled our thinking into five design frameworks that describe how the customer experience (CX) matured across interdependent layers. This is a reflective synthesis rather than an operational structure.

1. INTERFACE FRAMEWORK — CLARITY AND CONSISTENCY

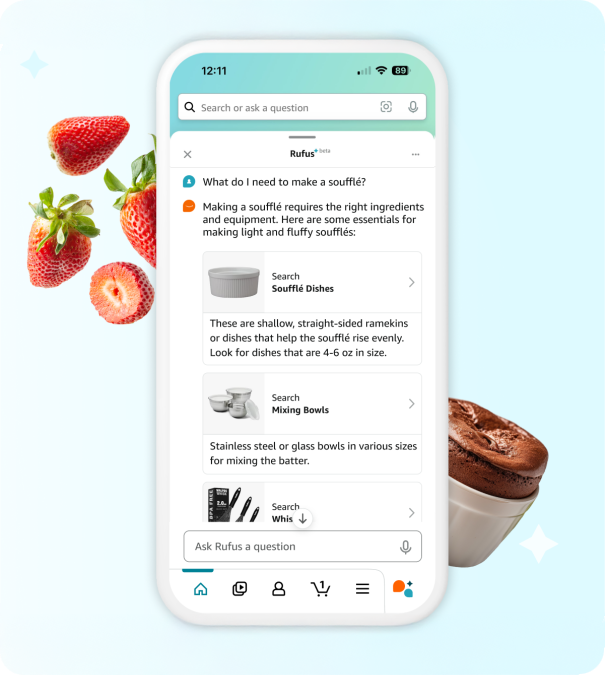

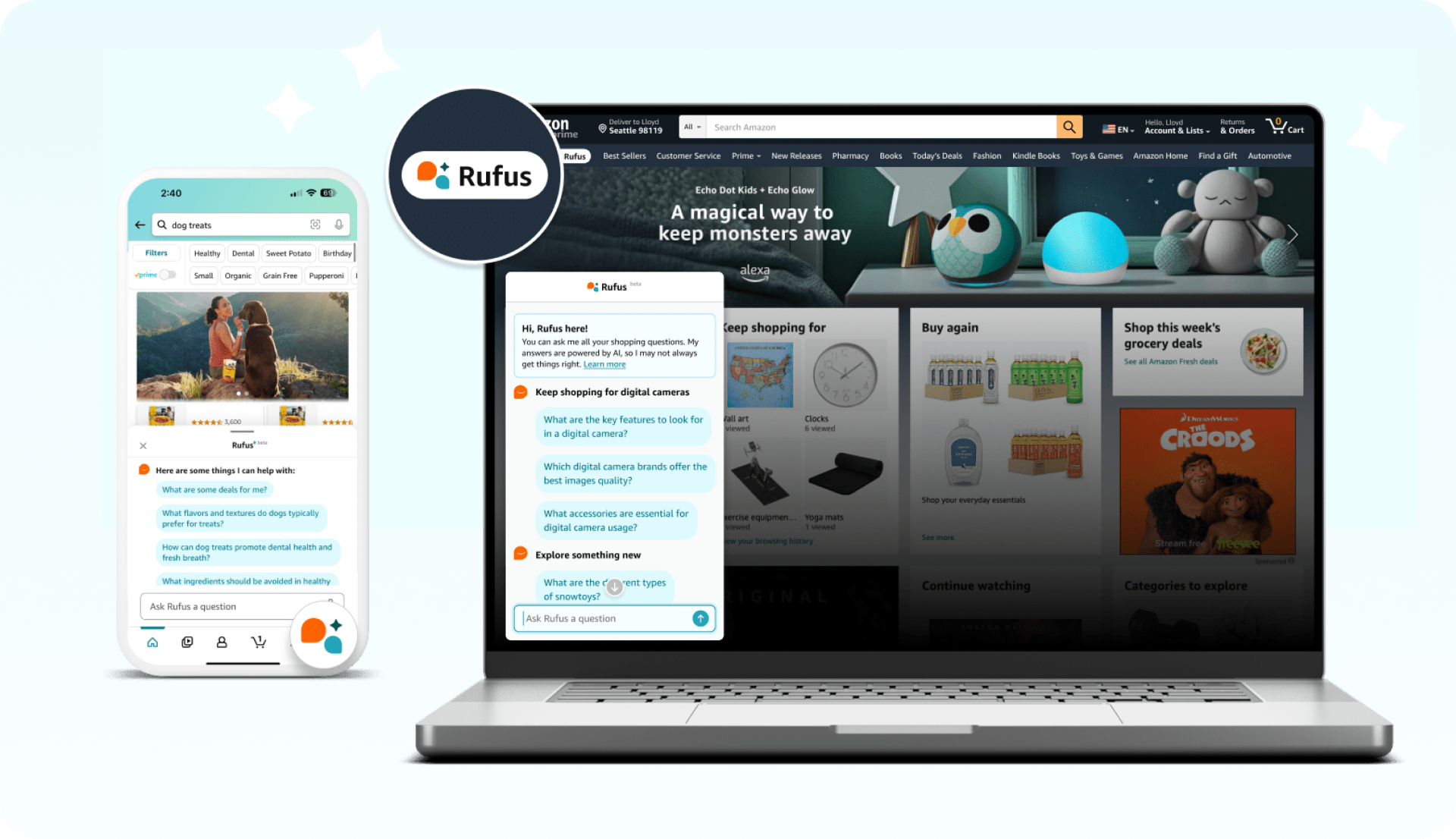

We began by refining our UI framework, evolving the core prototype elements—a conversational bottom sheet, suggested question pattern, and refinement card—into a structured design language.

We defined hierarchy, composition, and interaction patterns for product finding, expanding the component library with product cards, carousels, and other affordances. As Rufus moved from mobile to desktop, we scaled the framework into a unified, reusable system that ensured consistency across modalities.

We established consistent motion and transition patterns to bridge the conversational layer and store pages, so that moving from chat-based interaction to visual browsing felt natural and continuous. Progressive-reveal micro-animations guided attention, reduced cognitive load, and created a sense of pacing as Rufus surfaced information.

Together, these elements formed a scalable interface framework that provided visual clarity, structural coherence, and a foundation for future multimodal expansion.

2. BEHAVIORAL FRAMEWORK — INGRESS AND ENGAGEMENT

Defining when and how Rufus should engage was one of our most complex design challenges.

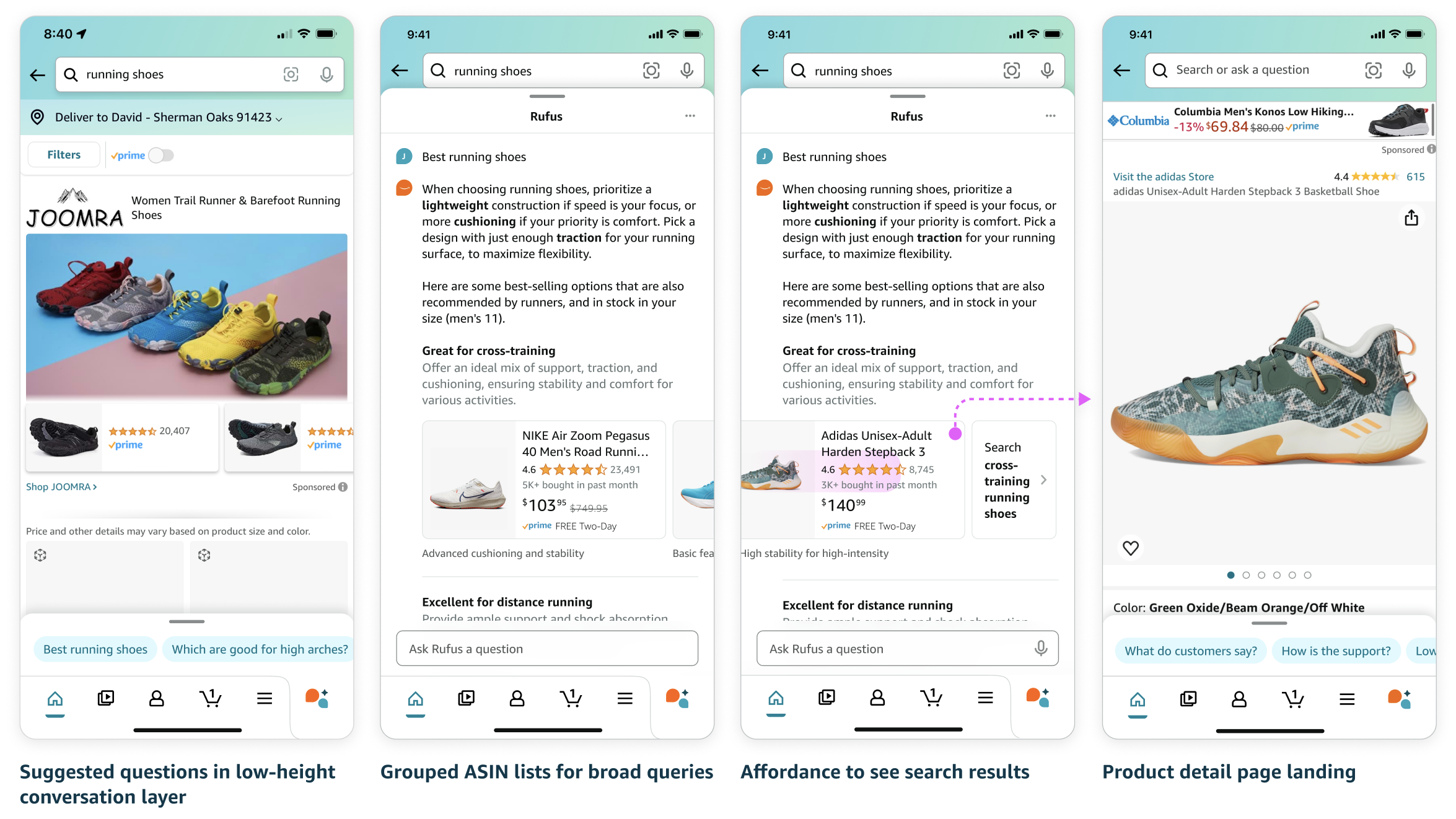

The first designs we released opened the conversational layer whenever a query seemed conversational. Customers found this intrusive and unpredictable, and the rate of immediate dismissal of the layer was high. In response, we reframed engagement around invitation rather than interruption, creating a tiered ingress framework aligned to model confidence and query intent:

Invited engagement (low confidence)

For most queries, Rufus surfaced a low-height bottom sheet with suggested questions that expanded into the full layer for multi-turn dialogue.

Auto-engagement (high confidence)

For a small set of well-defined queries, Rufus surfaced an answer by auto-opening the layer.

Inline experimentation

We later embedded Rufus elements directly into Amazon app pages to blend conversational guidance with traditional browsing.

MOTION STUDY SHOWING INVITED ENGAGEMENT THROUGH SUGGESTED QUESTIONS. INLINE ELEMENT ALSO SHOWN IN TOP SLOT OF SEARCH PAGE.

This multi-state system balanced intelligence with user control. We built motion prototypes that simulated each state and transition, which let us validate clarity and usability.

DESIGN PRINCIPLE: ENGAGE WHEN CONFIDENT, INVITE WHEN UNCERTAIN

3. RELEVANCE FRAMEWORK — FROM RESPONSE TO REASONING

Once engagement stabilized, we focused on response quality and transparency.

Early versions of our product-finding flow redirected customers to refined search results. Research, however, showed that customers expected curated product recommendations with explanations.

We iterated on our designs and worked with our technical partners to raise the quality of product picks to a high bar. Evaluating model output and guiding the model by refining its prompt were critical to shaping the customer experience.

Early release of product recommendations flow

Improved product recommendations flow

As a cross-functional team, we established a relevance framework that shaped how the model communicates:

Clarify intent with follow-up questions when confidence is low

Visually distinguish between narrowing categories and recommending products

Explain reasoning with concise insights in context

Provide attribution and source visibility

Visualize reasoning during latency (“Rufus is gathering products…”)

These cues built trust and transparency, helping customers understand how Rufus thinks and why it recommended specific products.

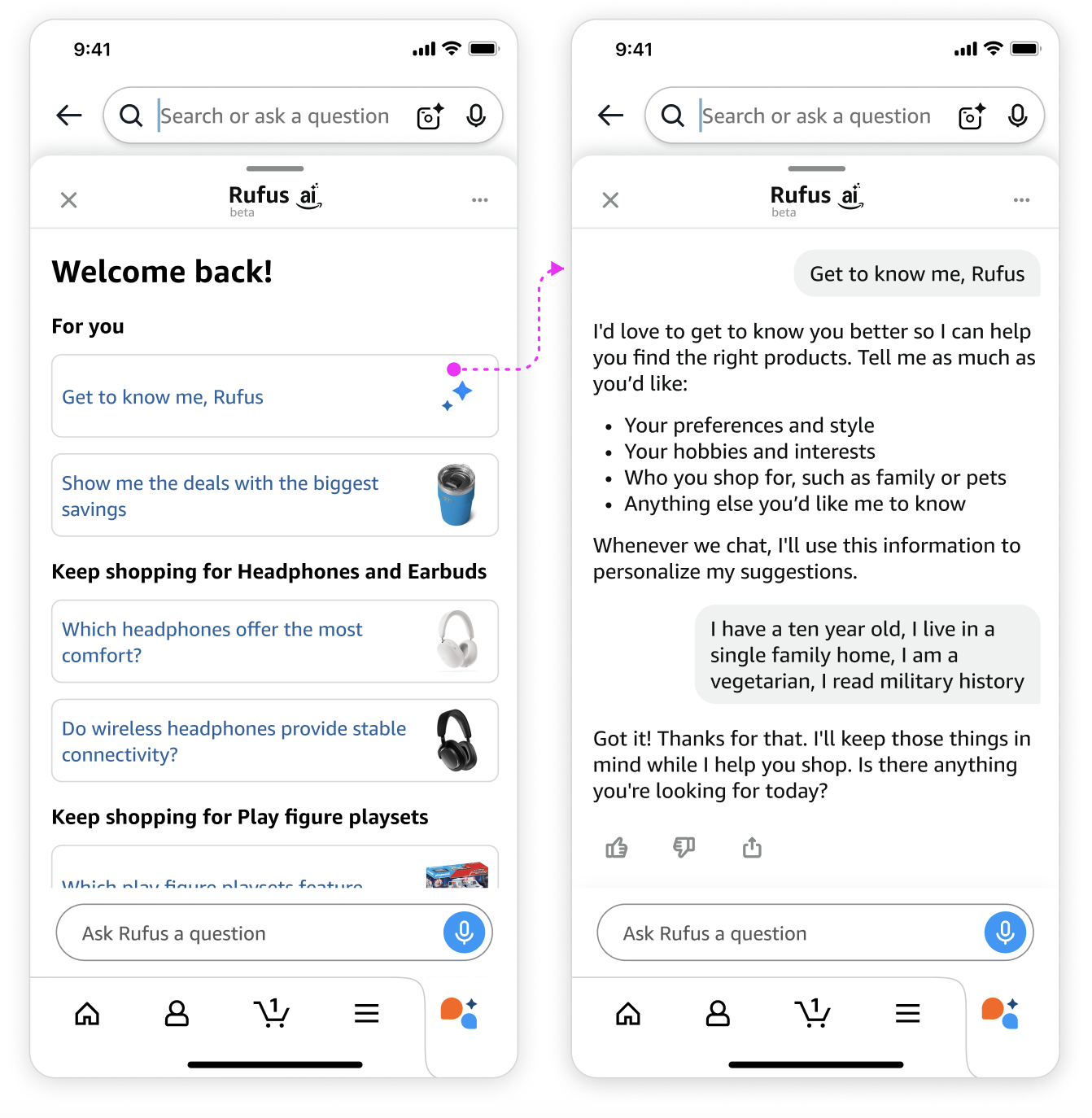

4. PERSONALIZATION AND CONTEXT

User research consistently showed that customers expect an AI assistant to personalize while remaining transparent. I collaborated with our data-science partners to define how personalization signals should inform the LLM—ensuring recommendations feel relevant while still supporting discovery and fairness. The goal was to avoid overfitting or bias while keeping results fresh and transparent.

We refined the prompting logic so that generated text and product recommendations adapted to user context, and so that responses made clear when they were personalized. We also designed affordances for customers to manage their preferences, reinforcing control and trust.

DESIGN TAKEAWAY: PROMPTING IS PART OF THE UX TOOLKIT—SHAPING HOW THE MODEL REASONS AND WHAT IT SAYS.

5. ADAPTIVE LAYOUT FRAMEWORK — DESIGNING FOR VARIABILITY

Because generative models produce non-deterministic outputs, we designed a dynamic layout framework for product recommendations that lets the model adjust aspects of the presentation format in real time:

Text-to-image ratios and component hierarchy

Content density and card grouping rules

Support for mixed media (text, image, video) within consistent formats

This adaptive framework is designed to maintain predictability while allowing for expressive variation across query types. As we work toward implementation, we see it as a reusable foundation for other generative experiences across Amazon Retail.

IMPACT

In the year since launch, we’ve steadily improved both engagement and the quality of the experience.

Active use increased 40%

Repeat engagement per user increased 30%

Rate of immediate dismissal of the conversation layer decreased 40%

Perceived helpfulness of our product recommendations increased 4x

Customers are describing Rufus as “helpful,” “personal,” and “saving time.”

“RUFUS IS SO HELPFUL! IT ANSWERS QUESTIONS ON ITEMS SO I DON’T NEED TO READ REVIEWS. IT SAVES ME PRECIOUS TIME.”

REFLECTION

Designing Rufus meant defining how intelligence should behave. Beyond the visual interface, we are designing system behavior, including prompting the model with direction on when and how to respond. It’s an intricate collaboration across job functions: we experiment through trial and error to shape emerging LLM capabilities into an intuitive customer-facing experience.

KEY LEARNINGS

Engage when confident, invite when uncertain. Match proactivity to system confidence.

Expose reasoning. Transparency and attribution build trust.

Design for uncertainty. Adaptive frameworks create stability amid variability.

Prototype behaviors, not screens. Interaction timing and motion reveal usability.

Prompting is design. Language architecture directly shapes perception of the CX.